At some point of time in my career, I was in a role that I would prepare scripts for auto-refresh the database by running some SQL migration scripts, so that the database will match the latest binaries.

Concept

Here is my definition of re-runnable SQL scripts.

A SQL script is re-runnable if and only if the script will only have impact to the SQL server exactly once regardless how many times you run the script.

This is a very important aspect if we are able to re-run some upgrading scripts without worrying about what-to-run and what-not-to-run during developments or deployments. Let alone continuous integration.

Implementation

There is a pattern in re-runnable SQL script. I will try to document as much as I could, but if you could learn the pattern instead of the code, then you can use it in any DDL (Data Definition Language) or DML (Data Manipulation Language).

Add column to existing table

If not exists(Select 1 from sys.columns where Name = N'PublishDate' and Object_ID = Object_ID(N'BlogPost'))

Begin

Alter Table dbo.BlogPost

Add PublishDate datetime NULL

Print 'INFO - Column PublishDate is added for table BlogPost successfully.'

End

Else

Begin

Print 'WARNING - Column PublishDate already exists for table BlogPost.'

End

Go

Pay attention to the if-else logic, we simply put our DDL inside a if clause. Too simple?

If we run the above script twice, you would expect it not running the second time.

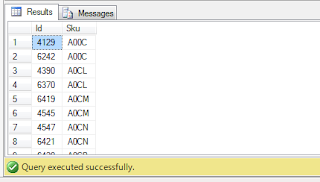

|

| As we can see in the result pane, the DDL will not run the second time. |

Create table

If not exists(Select 1 from sys.tables where Name = N'Fit')

Begin

CREATE TABLE [dbo].[Fit](

[Id] [int] IDENTITY(1,1) NOT NULL,

[Code] [varchar](50) NOT NULL,

[Name] [varchar](255) NOT NULL,

[SubjectToAcl] [bit] NOT NULL CONSTRAINT [DF_Fit_SubjectToAcl] DEFAULT ((0)),

[LimitedToStores] [bit] NOT NULL CONSTRAINT [DF_Fit_LimitedToStores] DEFAULT ((0))

) ON [PRIMARY]

Print 'INFO - Table Fit created successfully.'

End

Else

Begin

Print 'WARNING - Table Fit already exists.'

End

Go

Add PK

If not exists(Select 1 from sys.key_constraints where parent_object_id = Object_id('Fit'))

Begin

ALTER TABLE dbo.Fit

ADD CONSTRAINT PK_Fit PRIMARY KEY CLUSTERED (Id) ON [PRIMARY]

Print 'INFO - PK Fit added successfully.'

End

Else

Begin

Print 'WARNING - PK Fit already exists.'

End

GO

Alter an existing column NULL to NOT NULL

In this scenario, we are changing a column from nullable to non-nullable. Things get a little tricky here

The first if statement act as a precaution to check if the column exists, because we cannot modify a column if it does not exist and it will throw error and causing the script to stop.

The second if statement depends on what you are modifying from. If we were changing nvarchar(50) to nvarchar(max), we would have written it differently. Also, depending on your technical requirement, you may want to force the column to be nvarchar(max) regardless, or it may not be an issue at all. It really DEPENDS!!

If exists(Select * from sys.columns where Name = N'Keyword' and Object_ID = Object_ID(N'SearchTerm'))

Begin

If not exists(Select * from sys.columns where Name = N'Keyword' and Object_ID = Object_ID(N'SearchTerm') and Is_nullable = 0)

Begin

Alter Table dbo.SearchTerm

Alter column Keyword nvarchar(max) NOT NULL

Print 'INFO - Column Keyword is altered for table SearchTerm successfully.'

End

Else

Begin

Print 'WARNING - Column Keyword is already non-nullable for table SearchTerm.'

End

End

Else

Begin

Print 'ERROR - Column Keyword does not exists in table SearchTerm.'

End

Go

For argument sake, should I also be checking if table exists when I am adding a column to a table too? That's right. It will throw error if we try to add a column to a non-existing table. In my technical situation though, I know I am expecting the table there, but that would be a nice pre-caution. Therefore, it DEPENDS on your technical structure.

Alter an existing column TEXT to VARCHAR(MAX)

Slightly different approach to the above. I join the system types table to find out the definition of the column.

If exists( Select * from sys.columns C

join sys.types T on T.system_type_id = C.system_type_id

where C.Object_ID = Object_ID(N'MyTable')

and C.Name = 'Description'

and T.Name = 'text')

Begin

Alter Table dbo.MyTable

Alter column [Description] varchar(max) NULL

Print 'INFO - Column Description is altered for table MyTable successfully.'

End

Else

Begin

Print 'WARNING - Column Description is already not text type for table MyTable.'

End

Go

Insert Data with Identity Insert

Notice you must have the keyword

go after turning identity insert on/off, but since you can't have

go inside begin/end, the statements will be outside of the if-block.

SET IDENTITY_INSERT [dbo].[TaxCategory] ON

GO

If not exists (Select 1 from TaxRate where TaxCategoryId = 6 and CountryId = 6)

Begin

INSERT [dbo].[TaxRate] ([TaxCategoryId], [CountryId], [StateProvinceId], [Zip], [Percentage], [StoreId]) VALUES (6, 6, 0, NULL, CAST(10.0000 AS Decimal(18, 4)), 0)

Print 'INFO - Data for TaxRate.[TaxCategoryId] = 6 and TaxRate.[CountryId] = 6 inserted successfully.'

End

Else

Begin

Print 'WARNING - Data for TaxRate.[TaxCategoryId] = 6 and TaxRate.[CountryId] = 6 already exists.'

End

GO

SET IDENTITY_INSERT [dbo].[TaxCategory] OFF

GO

Update Data - Exact match

If not exists (Select 1 from Country where Published = 1 and TwoLetterIsoCode = 'AU')

Begin

Update Country

Set Published = 1

where TwoLetterIsoCode = 'AU'

Print 'INFO - Data for Country.Published = 1 for TwoLetterIsoCode = ''AU'' updated successfully'

End

Else

Begin

Print 'WARNING - Data for Country.Published = 1 for TwoLetterIsoCode = ''AU'' already updated.'

End

GO

Update Data - Multiple matches

The if clause is slightly different to above. It checks for

if exists not equal to instead of

if not exists equal to. That is because we want to update all the records as long as there is one or more than one record is returned when the where clause match.

If exists (Select 1 from Country where Published = 1 and TwoLetterIsoCode <> 'AU')

Begin

Update Country

Set Published = 0

where TwoLetterIsoCode <> 'AU'

Print 'INFO - Data for Country.Published = 0 for TwoLetterIsoCode <> ''AU'' updated successfully'

End

Else

Begin

Print 'WARNING - Data for Country.Published = 0 for TwoLetterIsoCode <> ''AU'' already updated.'

End

GO

Delete with conditions

If exists (Select 1 from [ShippingMethod] where [Name] = 'Australia Post, Regular Parcels')

Begin

Delete [ShippingMethod]

where [Name] <> 'Australia Post, Regular Parcels'

Print 'INFO - Data for ShippingMethod <> ''Australia Post, Regular Parcels'' deleted successfully.'

End

Else

Begin

Print 'INFO - Data for ShippingMethod <> ''Australia Post, Regular Parcels'' already deleted.'

End

GO

Conclusion

.Net developers might already notice I adopted some of the .Net logging technique in the print messages. That's right, I would look for warning or error (nothing fatal here) after running my script. The initial investment of a deep level logging would pay off easily in future troubleshooting. This is something that I would not do 7 years ago when I was not influenced by web developments.

I hope I have demonstrated enough as an appetizer of what re-runnable SQL scripts look like.

There is no hard rock science about what and how many checks to put in the where clause. It is up to the judgement call of the developer.