Scope

My photography website was built with Bootstrap 2.x. Here are some technical details about the site.

- A small website with fewer than 10 HTML pages.

- Hosted on AWS S3 with static content only.

- Since it is on S3, no backend languages are available, so C# and PHP are off limits.

- The Contact Us form is a third-party service embedded in an iframe.

I was quite happy with it for several reasons.

- Responsiveness.

- Simple and efficient with no backend developments.

- Extremely cheap and fast with AWS S3.

- Does the job well for my purpose.

- A UX score of 100 from Google PageSpeed Insights.

Unfortunately, it has some downsides.

- There is no single header and footer file for the site, so the same piece of code has to be copied and pasted between pages.

- The hover status of the nav bar is hardcoded. :(

- CSS and JS are not optimized.

Recently my designer gave the site a makeover, having described it as "dull and boring" :) I thought I might as well upgrade to a newer version of Bootstrap at the same time, as it is more mobile friendly.

Bootstrap

Firstly, we will download the Bootstrap source and extract it to a local folder.

According to the spec, I could just use the Bootstrap distribution version for my purpose, but as a developer, I wanted to see what's in the goody bag by getting my hands dirty.

Sass (Syntactically Awesome StyleSheets)

Setup

Firstly, we need to install Ruby. Then we will use gem to install the sass package.

$ gem install sass

Now we are able to use Sass to compile our CSS. There are many ways of using Sass, and a more advanced approach is to use Compass.

$ gem install compass

$ cd $/bootstrap.sass/assets

$ compass init

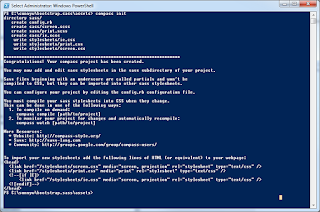

After we run these commands, it will install the compass and initialize a compass config file with some stub css files. A message in the console would look like this.

In order to work with the Bootstrap structure, I moved the stub files from $/sass to $/stylesheets, and changed the path as follow.

http_path = "/"

css_dir = "css"

sass_dir = "stylesheets"

images_dir = "images"

javascripts_dir = "javascripts"

Compass commands are quite straightforward. The following command compiles the Sass files manually.

$ compass compile

We can also use the watch command to recompile automatically whenever the Sass files change.

$ compass watch

Minification

To minify the CSS files, we can add a compressed switch to the compile command.

$ compass compile -s compressed

Or we can change the default setting in the config.rb file.

output_style = :compressed

I have also created a callback that generates different files for the development and production environments, depending on the switch passed in.

In my config.rb, I set the output style depending on the environment, and append the .min naming convention when compressing.

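require 'fileutils'

# Compressed output for production, expanded output otherwise.

output_style = (environment == :production) ? :compressed : :expanded

on_stylesheet_saved do |file|

  # File.exist? replaces File.exists?, which was removed in Ruby 3.2.

  if File.exist?(file) && (output_style == :compressed)

    filename = File.basename(file, File.extname(file))

    # Rename e.g. screen.css to screen.min.css inside css_dir.

    FileUtils.mv(file, css_dir + "/" + filename + ".min" + File.extname(file))

    puts " minify " + css_dir + "/" + filename + ".min" + File.extname(file)

  end

end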

We can now control what gets output by switching between development (the default) and production, for example with compass compile -e production.
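To make the naming convention concrete, here is a minimal sketch of the path calculation that the callback performs, pulled out into plain Ruby so it can be tried without Compass. The helper name minified_path is hypothetical (it is not part of Compass), and the example paths are assumptions for illustration.

```ruby
# Hypothetical helper, not part of Compass: computes the path that a
# compressed stylesheet is moved to under the ".min" naming convention.
def minified_path(file, css_dir)
  ext  = File.extname(file)        # e.g. ".css"
  name = File.basename(file, ext)  # e.g. "screen"
  File.join(css_dir, "#{name}.min#{ext}")
end

puts minified_path("css/screen.css", "css")  # prints css/screen.min.css
```

Keeping the rename logic in one small function like this also avoids building the same string twice, as the callback does for the move and for the log message.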

Map File

Source map files can be generated via a switch.

$ compass compile --sourcemap

Or via a setting in my config.rb.

sourcemap = (environment == :production)

CSS Vendor Prefixes

In order to deal with CSS vendor prefixes, and because we chose not to use the Gruntfile from Bootstrap, we need to integrate our own Autoprefixer.

Following the Autoprefixer Rails guide, we will install the gem.

$ gem install autoprefixer-rails

And we will add a callback in our Compass config.rb as follows.

require 'autoprefixer-rails'

on_stylesheet_saved do |file|

  css = File.read(file)

  map = file + '.map'

  # File.exist? replaces File.exists?, which was removed in Ruby 3.2.

  if File.exist? map

    # Pass the existing source map through so it stays in sync with the prefixed CSS.

    result = AutoprefixerRails.process(css,

      from: file,

      to: file,

      map: { prev: File.read(map), inline: false })

    File.open(file, 'w') { |io| io << result.css }

    File.open(map, 'w') { |io| io << result.map }

  else

    File.open(file, 'w') { |io| io << AutoprefixerRails.process(css).css }

  end

end

We can test it by creating a new test.scss as follows.

a {

display: flex;

}

After compiling, the generated CSS contains the vendor prefixes.

/* line 1, ../stylesheets/test.scss */

a {

display: -webkit-box;

display: -webkit-flex;

display: -ms-flexbox;

display: flex;

}
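A quick way to confirm the prefixes without reading the compiled file by eye is a small plain-Ruby check. The sketch below is only an illustration: the helper declared_values is hypothetical, the CSS is pasted in as a string rather than read from the compiled file, and the expected list assumes the same browser targets that produced the output above (other Autoprefixer versions or settings may emit a different set).

```ruby
# Hypothetical helper: lists every value declared for one CSS property.
def declared_values(css, property)
  # The lookbehind keeps "display" from matching inside a longer property name.
  pattern = /(?<![\w-])#{Regexp.escape(property)}\s*:\s*([^;}]+)/
  css.scan(pattern).flatten.map(&:strip)
end

# Sample input copied from the compiled output shown above.
compiled = <<~CSS
  a {
    display: -webkit-box;
    display: -webkit-flex;
    display: -ms-flexbox;
    display: flex;
  }
CSS

values  = declared_values(compiled, "display")
missing = %w[-webkit-box -webkit-flex -ms-flexbox flex] - values

puts values.inspect
puts missing.empty? ? "all prefixes present" : "missing: #{missing.join(', ')}"
```

In practice the string could come from File.read on the compiled stylesheet instead of the inline sample.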

Compile

In our screen.scss (generated by compass init), we will add the line below. It imports the Bootstrap partial into screen.scss; we can add our custom SCSS to this file later on.

@import "bootstrap";

Migration

There is a comprehensive migration guide from the Bootstrap team. There is also a conversion tool available that does the boring find-and-replace part of the job.

AngularJS

I am also ditching the default Bootstrap jQuery plugins and using AngularJS instead.

It is just a nicer way for me to manage the header and footer without using any backend language like C# or PHP.

This can be achieved with UI Bootstrap if done correctly.

I need to make some small changes to the HTML to remove the default Bootstrap and jQuery scripts and include the Angular and UI Bootstrap scripts.

<!-- include angular js -->

<script src="js/angular.min.js"></script>

<!-- include ui bootstrap -->

<script src="js/ui-bootstrap-tpls-1.3.1.min.js"></script>

<!-- remove the jquery and bootstrap js tags below -->

<script src="js/jquery-1.9.1.min.js"></script>

<script src="js/bootstrap.min.js"></script>

Now I am able to use ng-include to add the header and footer.

<div ng-include="'_header.html'"></div>

I can also use an Angular-based carousel to render my image gallery.

<div id="carousel">

<uib-carousel active="active">

<uib-slide ng-repeat="f in selected.files track by f" index="f">

<img ng-src="img/{{ selected.folder }}/{{ f }}.jpg" />

</uib-slide>

</uib-carousel>

</div>