Scope

We have recently been having issues with our payment gateway integration. Occasionally, our synchronous call to the provider's server does not get a response back.

We will write a scheduled function that runs regularly. It will pull a list of pending orders and re-run the authorize and capture steps.

We are:

- using nopCommerce 3.3

- utilizing the nopCommerce Schedule Task

- using plugin approach

Technical Overview

We will use Nop.Services.Tasks.Task and ScheduleTaskService to create a Nop schedule task that runs periodically.

Implementation

Task

Firstly, create a new plugin. In our plugin, we will create a MyTask class that implements ITask.

The only method in the interface is Execute(). This is where we put our calling code. In this example, I am calling my own method QueryPendingOrders().
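As a rough sketch of what such a task class might look like: the namespace Nop.Plugin.Misc.PendingOrders and the IPendingOrderService interface are hypothetical names chosen for illustration, and the constructor injection assumes nopCommerce resolves the task through its IoC container, as it does for the built-in tasks.

```csharp
using Nop.Services.Tasks;

namespace Nop.Plugin.Misc.PendingOrders // hypothetical plugin namespace
{
    // Hypothetical service interface; only QueryPendingOrders() comes from the article.
    public interface IPendingOrderService
    {
        void QueryPendingOrders();
    }

    public class MyTask : ITask
    {
        private readonly IPendingOrderService _pendingOrderService;

        // Assumption: the task is created via the container, so dependencies can be injected here.
        public MyTask(IPendingOrderService pendingOrderService)
        {
            _pendingOrderService = pendingOrderService;
        }

        // Called by the nopCommerce task thread each time the schedule fires.
        public void Execute()
        {
            _pendingOrderService.QueryPendingOrders();
        }
    }
}
```

Keeping Execute() this thin leaves the retry logic in the service class, where it can be called from elsewhere as well.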

IoC

In my example, I am using a new service class, so I will need to register the service class via IoC in our plugin. A new DependencyRegistrar class will do the trick.

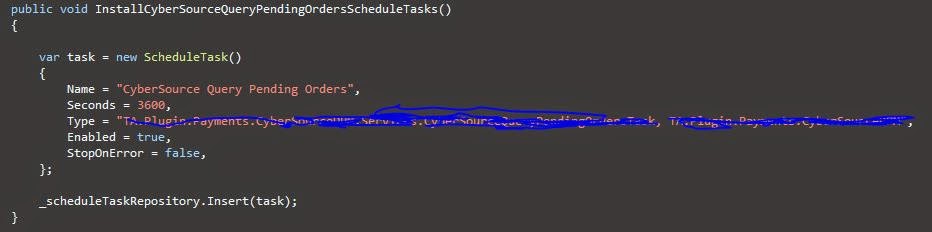
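For readers who cannot see the screenshot, a registrar along these lines should be close. This is a sketch rather than the exact code: IPendingOrderService and PendingOrderService are hypothetical names for the service interface and its implementation, and the Register signature shown is the two-parameter one I believe nopCommerce 3.3 uses.

```csharp
using Autofac;
using Nop.Core.Infrastructure;
using Nop.Core.Infrastructure.DependencyManagement;

namespace Nop.Plugin.Misc.PendingOrders // hypothetical plugin namespace
{
    public class DependencyRegistrar : IDependencyRegistrar
    {
        public void Register(ContainerBuilder builder, ITypeFinder typeFinder)
        {
            // IPendingOrderService / PendingOrderService are hypothetical names
            // for the plugin's own service interface and implementation.
            builder.RegisterType<PendingOrderService>()
                   .As<IPendingOrderService>()
                   .InstancePerLifetimeScope();
        }

        // Registrars are applied in ascending order; any value after the core registrar works here.
        public int Order
        {
            get { return 1; }
        }
    }
}
```

InstancePerLifetimeScope() is used here instead of a per-HTTP-request lifetime because the task runs on a background thread, where there may be no current request.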

Install Schedule Task

Next, we need to create a schedule task for the task. This can be done in the overrides of Nop.Core.Plugins.BasePlugin: in the Install() method of my plugin, we insert the schedule task.
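A minimal sketch of the install step, assuming the plugin assembly and namespace are both called Nop.Plugin.Misc.PendingOrders (hypothetical), and with an illustrative task name and interval. The Type string follows the "Namespace.Class, AssemblyName" form that nopCommerce uses to locate the task class. A real plugin class would usually also implement one of the plugin-type interfaces, which is omitted here.

```csharp
using Nop.Core.Domain.Tasks;
using Nop.Core.Plugins;
using Nop.Services.Tasks;

namespace Nop.Plugin.Misc.PendingOrders // hypothetical plugin namespace
{
    public class PendingOrdersPlugin : BasePlugin
    {
        // "Namespace.Class, AssemblyName" of the ITask implementation (hypothetical names).
        private const string TaskType =
            "Nop.Plugin.Misc.PendingOrders.MyTask, Nop.Plugin.Misc.PendingOrders";

        private readonly IScheduleTaskService _scheduleTaskService;

        public PendingOrdersPlugin(IScheduleTaskService scheduleTaskService)
        {
            _scheduleTaskService = scheduleTaskService;
        }

        public override void Install()
        {
            // Avoid inserting a duplicate row if the plugin is reinstalled.
            if (_scheduleTaskService.GetTaskByType(TaskType) == null)
            {
                _scheduleTaskService.InsertTask(new ScheduleTask
                {
                    Name = "Retry pending orders", // illustrative name
                    Seconds = 600,                 // illustrative interval, editable later in admin
                    Type = TaskType,
                    Enabled = true,
                    StopOnError = false
                });
            }

            base.Install();
        }

        public override void Uninstall()
        {
            // Remove the schedule task so it does not point at a missing type.
            var task = _scheduleTaskService.GetTaskByType(TaskType);
            if (task != null)
                _scheduleTaskService.DeleteTask(task);

            base.Uninstall();
        }
    }
}
```

Removing the task in Uninstall() is my own addition; without it, the schedule task row would remain after the plugin is gone.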

Calling Method

For the purpose of demonstration, I am just writing to the log.
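Something like the following would do for the demo. PendingOrderService and IPendingOrderService are hypothetical names (the interface is assumed to declare a single QueryPendingOrders() method), and the log message text is made up.

```csharp
using System;
using Nop.Core.Domain.Logging;
using Nop.Services.Logging;

namespace Nop.Plugin.Misc.PendingOrders // hypothetical plugin namespace
{
    // IPendingOrderService is a hypothetical interface declaring void QueryPendingOrders().
    public class PendingOrderService : IPendingOrderService
    {
        private readonly ILogger _logger;

        public PendingOrderService(ILogger logger)
        {
            _logger = logger;
        }

        public void QueryPendingOrders()
        {
            // Demo only: record each call so the run interval can be checked in the log.
            // The real version would load pending orders and re-run authorize and capture.
            _logger.InsertLog(
                LogLevel.Information,
                string.Format("QueryPendingOrders called at {0:u}", DateTime.UtcNow));
        }
    }
}
```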

Schedule

After installing the plugin, a new schedule task is created as follows. (I have changed the run period to 60 seconds for the demo.)

Log

Let it run for a few minutes and check the log. The entries show the task being called almost exactly every 60 seconds.

Conclusion

There was not much work involved in creating a schedule task. All the magic is already done and made available to us by nopCommerce.

During development, I noticed that the TaskManager uses the singleton pattern. It is responsible for instantiating the TaskThread instances. The task threads then run continuously and kick off Execute() periodically.

One thing I would still like to do is compare the performance of a Nop schedule task against a SQL Server Agent job. I have a feeling that the SQL job may run a little faster, as the Nop task thread is not triggered by a push notification but is simply a continuously running thread.